🍓 𝘌𝘷𝘦𝘳 𝘸𝘰𝘯𝘥𝘦𝘳𝘦𝘥 𝘩𝘰𝘸 𝘮𝘢𝘯𝘺 𝘙'𝘴 𝘢𝘳𝘦 𝘪𝘯 "𝘴𝘵𝘳𝘢𝘸𝘣𝘦𝘳𝘳𝘺"? 𝘞𝘦𝘭𝘭, 𝘊𝘩𝘢𝘵𝘎𝘗𝘛 𝘴𝘶𝘳𝘦 𝘥𝘪𝘥—𝘢𝘯𝘥 𝘨𝘰𝘵 𝘪𝘵 𝘩𝘪𝘭𝘢𝘳𝘪𝘰𝘶𝘴𝘭𝘺 𝘸𝘳𝘰𝘯𝘨!

I recently came across a viral reel where someone asked ChatGPT how many R’s are in the word “strawberry.” You’d think it’s an easy question, right? Turns out our friendly AI managed to get even this simple answer wrong.

🤖 Instead of confidently saying “3”, ChatGPT fumbled. It was a classic case of AI showing that it’s not perfect. This blunder had everyone laughing and sharing their own funny AI mistakes.

🧑‍🏭 So, 𝗵𝗼𝘄 𝗱𝗼 𝗟𝗟𝗠𝘀 𝘄𝗼𝗿𝗸?

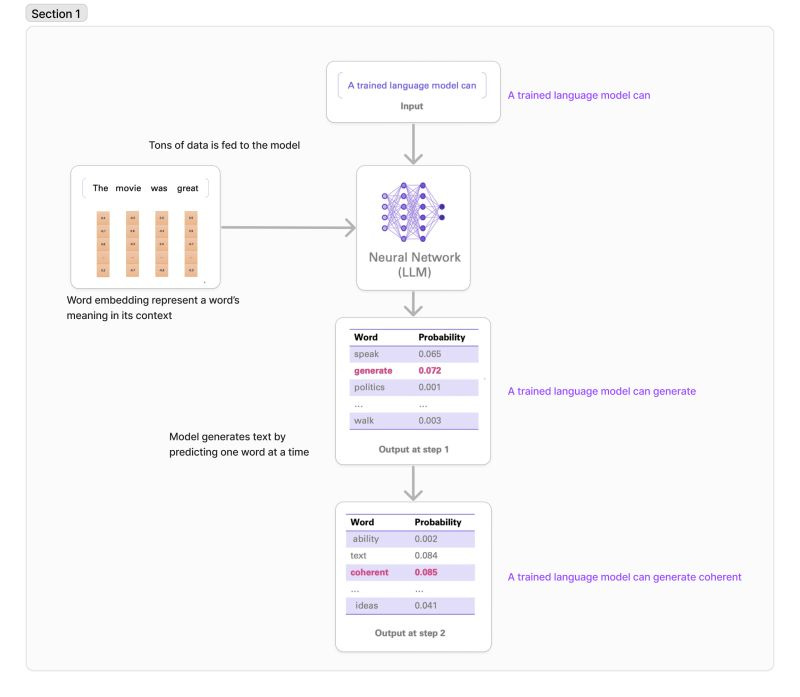

Large Language Models (LLMs) like ChatGPT learn by analyzing massive amounts of text data. They 𝗱𝗼𝗻’𝘁 “𝘂𝗻𝗱𝗲𝗿𝘀𝘁𝗮𝗻𝗱” 𝘄𝗼𝗿𝗱𝘀 𝗹𝗶𝗸𝗲 𝘄𝗲 𝗱𝗼; they 𝗿𝗲𝗰𝗼𝗴𝗻𝗶𝘇𝗲 𝗽𝗮𝘁𝘁𝗲𝗿𝗻𝘀 in that text. When you ask a question, the model builds its response one piece at a time, 𝗽𝗿𝗲𝗱𝗶𝗰𝘁𝗶𝗻𝗴 𝘁𝗵𝗲 𝗺𝗼𝘀𝘁 𝗹𝗶𝗸𝗲𝗹𝘆 𝗻𝗲𝘅𝘁 𝘁𝗼𝗸𝗲𝗻 (a word or a piece of a word) over and over.
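To make "predicting from patterns" concrete, here is a toy sketch. It is nowhere near a real LLM: it only counts which word follows which in a tiny made-up corpus and picks the most frequent follower. The corpus and the `predict_next` helper are illustrative assumptions, not anything ChatGPT actually uses.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish .".split()

# Count how often each word is followed by each other word.
following = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    following[current][nxt] += 1


def predict_next(word):
    """Return the word that most often followed `word`, or None if unseen."""
    candidates = following.get(word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


print(predict_next("the"))  # prints "cat" ("cat" follows "the" twice)
```

Real models do the same kind of "what usually comes next?" guessing, but over tokens and with billions of learned parameters instead of a simple count table.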

🤿 But let's dive deeper: these models use advanced algorithms and 𝗮𝗿𝗰𝗵𝗶𝘁𝗲𝗰𝘁𝘂𝗿𝗲𝘀, 𝗹𝗶𝗸𝗲 𝗧𝗿𝗮𝗻𝘀𝗳𝗼𝗿𝗺𝗲𝗿𝘀, to handle the complexity of language. The Transformer architecture employs mechanisms called 𝗮𝘁𝘁𝗲𝗻𝘁𝗶𝗼𝗻 𝗵𝗲𝗮𝗱𝘀, which allow the 𝗺𝗼𝗱𝗲𝗹 𝘁𝗼 𝗳𝗼𝗰𝘂𝘀 𝗼𝗻 𝘃𝗮𝗿𝗶𝗼𝘂𝘀 𝗽𝗮𝗿𝘁𝘀 𝗼𝗳 𝘁𝗵𝗲 𝗶𝗻𝗽𝘂𝘁 𝘁𝗲𝘅𝘁 𝘁𝗼 𝗯𝗲𝘁𝘁𝗲𝗿 𝗴𝗿𝗮𝘀𝗽 𝘁𝗵𝗲 𝗰𝗼𝗻𝘁𝗲𝘅𝘁. The text passes through a stack of layers, and each layer refines the representation produced by the one before it; the output of the final layer is what the model uses to predict the next token.
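For the curious, here is a minimal sketch of the idea behind a single attention head, written in plain Python. It assumes the standard scaled dot-product formulation and uses made-up 2-dimensional vectors; real models learn these vectors and run many heads in parallel, so treat this as an illustration rather than how any particular model is implemented.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # Score each input position by how well its key matches the query.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Blend the value vectors according to those weights.
    dims = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dims)]
    return weights, output


# Made-up vectors for three input positions (illustrative only).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print(weights)  # the first and third positions get more weight than the second
print(output)
```

The weights are the "focus": positions whose keys line up with the query contribute more to the blended output.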

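However, because they lack true comprehension, they sometimes stumble on seemingly simple tasks. 𝗧𝗵𝗲𝗶𝗿 "𝘂𝗻𝗱𝗲𝗿𝘀𝘁𝗮𝗻𝗱𝗶𝗻𝗴" 𝗶𝘀 𝘀𝘁𝗮𝘁𝗶𝘀𝘁𝗶𝗰𝗮𝗹, 𝗻𝗼𝘁 𝘀𝗲𝗺𝗮𝗻𝘁𝗶𝗰. They also typically read text as multi-character chunks (tokens) rather than letter by letter, which is a commonly cited reason that counting letters trips them up. So while they excel at generating human-like text, they can make mistakes that a human would easily avoid.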

By contrast, a two-line code snippet with a simple regex-based pattern search can count the R's in "strawberry" instantly. This highlights the gap between narrow, well-defined tasks and the general capabilities of LLMs: these models can fumble on simple questions, yet they excel in more complex applications and enable new possibilities.
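Here is one way that regex search could look in Python. The `count_letter` helper is just a name picked for this sketch; the core is a single `re.findall` call.

```python
import re


def count_letter(word, letter):
    """Count case-insensitive occurrences of `letter` in `word`."""
    return len(re.findall(re.escape(letter), word, flags=re.IGNORECASE))


print(count_letter("strawberry", "r"))  # prints 3
```

No statistics, no guessing: the code looks at every character, so it gets the same answer every time.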

Have you had any funny AI interactions? Share in the comments below.